Python Reference¶

nomad.metainfo¶

nomad.config¶

This module describes all configurable parameters for the nomad python code. The configuration is used for all executed python code including API, worker, CLI, and other scripts. To use the configuration in your own scripts or new modules, simply import this module.

All parameters are structured into objects for two reasons. First, to have

categories. Second, to allow runtime manipulation that is not effected

by python import logic. The categories are choosen along infrastructure components:

mongo, elastic, etc.

This module also provides utilities to read the configuration from environment variables and .yaml files. This is done automatically on import. The precedence is env over .yaml over defaults.

-

class

nomad.config.NomadConfig(**kwargs)¶ A class for configuration categories. It is a dict subclass that uses attributes as key/value pairs.

-

__init__(**kwargs)¶ Initialize self. See help(type(self)) for accurate signature.

-

-

nomad.config.load_config()¶ Loads the configuration from nomad.yaml and environment.

nomad.infrastructure¶

This module provides function to establish connections to the database, searchengine, etc.

infrastructure services. Usually everything is setup at once with setup(). This

is run once for each api and worker process. Individual functions for partial setups

exist to facilitate testing, aspects of nomad.cli, etc.

-

nomad.infrastructure.elastic_client= None¶ The elastic search client.

-

nomad.infrastructure.mongo_client= None¶ The pymongo mongodb client.

-

nomad.infrastructure.setup()¶ Uses the current configuration (nomad/config.py and environment) to setup all the infrastructure services (repository db, mongo, elastic search) and logging. Will create client instances for the databases and has to be called before they can be used.

-

nomad.infrastructure.setup_files()¶

-

nomad.infrastructure.setup_mongo(client=False)¶ Creates connection to mongodb.

-

nomad.infrastructure.setup_elastic(create_mappings=True)¶ Creates connection to elastic search.

-

exception

nomad.infrastructure.KeycloakError¶

-

class

nomad.infrastructure.Keycloak¶ A class that encapsulates all keycloak related functions for easier mocking and configuration

-

__init__()¶ Initialize self. See help(type(self)) for accurate signature.

-

auth(headers: Dict[str, str], allow_basic: bool = False) → Tuple[object, str]¶ Performs authentication based on the provided headers. Either basic or bearer.

- Returns

The user and its access_token

- Raises

-

basicauth(username: str, password: str) → str¶ Performs basic authentication and returns an access token.

- Raises

-

tokenauth(access_token: str) → object¶ Authenticates the given access_token

- Returns

The user

- Raises

-

add_user(user, bcrypt_password=None, invite=False)¶ Adds the given

nomad.datamodel.Userinstance to the configured keycloak realm using the keycloak admin API.

-

search_user(query: str = None, max=1000, **kwargs)¶

-

get_user(user_id: str = None, username: str = None, user=None) → object¶ Retrives all available information about a user from the keycloak admin interface. This must be used to retrieve complete user information, because the info solely gathered from tokens is generally incomplete.

-

-

nomad.infrastructure.reset(remove: bool)¶ Resets the databases mongo, elastic/calcs, and all files. Be careful. In contrast to

remove(), it will only remove the contents of dbs and indicies. This function just attempts to remove everything, there is no exception handling or any warranty it will succeed.- Parameters

remove – Do not try to recreate empty databases, remove entirely.

-

nomad.infrastructure.send_mail(name: str, email: str, message: str, subject: str)¶ Used to programmatically send mails.

- Parameters

name – The email recipient name.

email – The email recipient address.

messsage – The email body.

subject – The subject line.

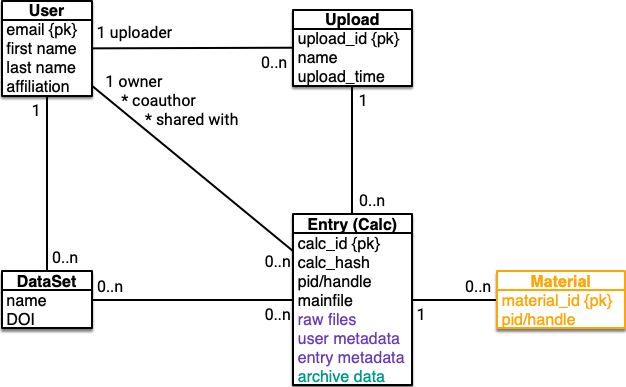

nomad.datamodel¶

Introduction¶

This is about the datamodel that is used to represent NOMAD entries in our databases and search engines. The respective data, also known as (repository) metadata is also part of the NOMAD Archive and the datamodel is also defined based on the NOMAD Metainfo (section metadata). It covers all information that users can search for and that can be easily rendered on the GUI. The information is readily available through the repo API.

See also the datamodel section in the introduction.

This module contains classes that allow to represent the core

nomad data entities (entries/calculations, users, datasets) on a high level of abstraction

independent from their representation in the different modules

nomad.processing, nomad.parsing, nomad.search, nomad.app.

Datamodel entities¶

The entities in the datamodel are defined as NOMAD Metainfo sections. They are treated similarily to all Archive data. The entry/calculation datamodel data is created during processing. It is not about representing every detail, but those parts that are directly involved in api, processing, mirroring, or other ‘infrastructure’ operations.

The class User is used to represent users and their attributes.

-

class

nomad.datamodel.User(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ A NOMAD user.

Typically a NOMAD user has a NOMAD account. The user related data is managed by NOMAD keycloak user-management system. Users are used to denote uploaders, authors, people to shared data with embargo with, and owners of datasets.

- Parameters

user_id – The unique, persistent keycloak UUID

username – The unique, persistent, user chosen username

first_name – The users first name (including all other given names)

last_name – The users last name

affiliation – The name of the company and institutes the user identifies with

affiliation_address – The address of the given affiliation

create – The time the account was created

repo_user_id – The id that was used to identify this user in the NOMAD CoE Repository

is_admin – Bool that indicated, iff the user the use admin user

-

m_def: Section = nomad.datamodel.datamodel.User:Section¶

-

user_id¶ An optimized replacement for Quantity suitable for primitive properties.

-

username¶ An optimized replacement for Quantity suitable for primitive properties.

-

created¶ To define quantities, instantiate

Quantityas a classattribute values in a section classes. The name of a quantity is automatically taken from its section class attribute. You can provide all other attributes to the constructor with keyword argumentsSee metainfo-sections to learn about section classes. In Python terms,

Quantityis a descriptor. Descriptors define how to get and set attributes in a Python object. This allows us to use sections like regular Python objects and quantity like regular Python attributes.Each quantity must define a basic data type and a shape. The values of a quantity must fulfil the given type. The default shape is a single value. Quantities can also have physical units. Units are applied to all values.

-

type¶ Defines the datatype of quantity values. This is the type of individual elements in a potentially complex shape. If you define a list of integers for example, the shape would be list and the type integer:

Quantity(type=int, shape=['0..*']).The type can be one of:

a build-in primitive Python type:

int,str,bool,floatan instance of

MEnum, e.g.MEnum('one', 'two', 'three')a section to define references to other sections as quantity values

a custom meta-info

DataType, see Environmentsa numpy dtype, e.g.

np.dtype('float32')typing.Anyto support any value

If set to dtype, this quantity will use a numpy array or scalar to store values internally. If a regular (nested) Python list or Python scalar is given, it will be automatically converted. The given dtype will be used in the numpy value.

To define a reference, either a section class or instance of

Sectioncan be given. See metainfo-sections for details. Instances of the given section constitute valid values for this type. Upon serialization, references section instance will represented with metainfo URLs. See Resources.For quantities with more than one dimension, only numpy arrays and dtypes are allowed.

-

shape¶ The shape of the quantity. It defines its dimensionality.

A shape is a list, where each item defines one dimension. Each dimension can be:

an integer that defines the exact size of the dimension, e.g.

[3]is the shape of a 3D spacial vectora string that specifies a possible range, e.g.

0..*,1..*,3..6the name of an int typed and shapeless quantity in the same section which values define the length of this dimension, e.g.

number_of_atomsdefines the length ofatom_positions

Range specifications define lower and upper bounds for the possible dimension length. The

*can be used to denote an arbitrarily high upper bound.Quantities with dimensionality (length of the shape) higher than 1, must be numpy arrays. Theire type must be a dtype.

-

is_scalar¶ Derived quantity that is True, iff this quantity has shape of length 0

-

unit¶ The physics unit for this quantity. It is optional.

Units are represented with the Pint Python package. Pint defines units and their algebra. You can either use pint units directly, e.g.

units.m / units.s. The metainfo provides a preconfigured pint unit registryureg. You can also provide the unit as pint parsable string, e.g.'meter / seconds'or'm/s'.

-

default¶ The default value for this quantity. The value must match type and shape.

Be careful with a default value like

[]as it will be the default value for all occurrences of this quantity.

Quantities are mapped to Python properties on all section objects that instantiate the Python class/section definition that has this quantity. This means quantity values can be read and set like normal Python attributes.

In some cases it might be desirable to have virtual and read only quantities that are not real quantities used for storing values, but rather define an interface to other quantities. Examples for this are synonyms and derived quantities.

-

derived¶ A Python callable that takes the containing section as input and outputs the value for this quantity. This quantity cannot be set directly, its value is only derived by the given callable. The callable is executed when this quantity is get. Derived quantities are always virtual.

-

cached¶ A bool indicating that derived values should be cached unless the underlying section has changed.

-

virtual¶ A boolean that determines if this quantity is virtual. Virtual quantities can be get/set like regular quantities, but their values are not (de-)serialized, hence never permanently stored.

-

-

repo_user_id¶ Optional, legacy user id from the old NOMAD CoE repository.

-

is_admin¶ To define quantities, instantiate

Quantityas a classattribute values in a section classes. The name of a quantity is automatically taken from its section class attribute. You can provide all other attributes to the constructor with keyword argumentsSee metainfo-sections to learn about section classes. In Python terms,

Quantityis a descriptor. Descriptors define how to get and set attributes in a Python object. This allows us to use sections like regular Python objects and quantity like regular Python attributes.Each quantity must define a basic data type and a shape. The values of a quantity must fulfil the given type. The default shape is a single value. Quantities can also have physical units. Units are applied to all values.

-

type Defines the datatype of quantity values. This is the type of individual elements in a potentially complex shape. If you define a list of integers for example, the shape would be list and the type integer:

Quantity(type=int, shape=['0..*']).The type can be one of:

a build-in primitive Python type:

int,str,bool,floatan instance of

MEnum, e.g.MEnum('one', 'two', 'three')a section to define references to other sections as quantity values

a custom meta-info

DataType, see Environmentsa numpy dtype, e.g.

np.dtype('float32')typing.Anyto support any value

If set to dtype, this quantity will use a numpy array or scalar to store values internally. If a regular (nested) Python list or Python scalar is given, it will be automatically converted. The given dtype will be used in the numpy value.

To define a reference, either a section class or instance of

Sectioncan be given. See metainfo-sections for details. Instances of the given section constitute valid values for this type. Upon serialization, references section instance will represented with metainfo URLs. See Resources.For quantities with more than one dimension, only numpy arrays and dtypes are allowed.

-

shape The shape of the quantity. It defines its dimensionality.

A shape is a list, where each item defines one dimension. Each dimension can be:

an integer that defines the exact size of the dimension, e.g.

[3]is the shape of a 3D spacial vectora string that specifies a possible range, e.g.

0..*,1..*,3..6the name of an int typed and shapeless quantity in the same section which values define the length of this dimension, e.g.

number_of_atomsdefines the length ofatom_positions

Range specifications define lower and upper bounds for the possible dimension length. The

*can be used to denote an arbitrarily high upper bound.Quantities with dimensionality (length of the shape) higher than 1, must be numpy arrays. Theire type must be a dtype.

-

is_scalar Derived quantity that is True, iff this quantity has shape of length 0

-

unit The physics unit for this quantity. It is optional.

Units are represented with the Pint Python package. Pint defines units and their algebra. You can either use pint units directly, e.g.

units.m / units.s. The metainfo provides a preconfigured pint unit registryureg. You can also provide the unit as pint parsable string, e.g.'meter / seconds'or'm/s'.

-

default The default value for this quantity. The value must match type and shape.

Be careful with a default value like

[]as it will be the default value for all occurrences of this quantity.

Quantities are mapped to Python properties on all section objects that instantiate the Python class/section definition that has this quantity. This means quantity values can be read and set like normal Python attributes.

In some cases it might be desirable to have virtual and read only quantities that are not real quantities used for storing values, but rather define an interface to other quantities. Examples for this are synonyms and derived quantities.

-

derived A Python callable that takes the containing section as input and outputs the value for this quantity. This quantity cannot be set directly, its value is only derived by the given callable. The callable is executed when this quantity is get. Derived quantities are always virtual.

-

cached A bool indicating that derived values should be cached unless the underlying section has changed.

-

virtual A boolean that determines if this quantity is virtual. Virtual quantities can be get/set like regular quantities, but their values are not (de-)serialized, hence never permanently stored.

-

-

is_oasis_admin¶ An optimized replacement for Quantity suitable for primitive properties.

-

static

get(*args, **kwargs) → nomad.datamodel.datamodel.User¶

-

full_user() → nomad.datamodel.datamodel.User¶ Returns a User object with all attributes loaded from the user management system.

The class Dataset is used to represent datasets and their attributes.

-

class

nomad.datamodel.Dataset(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ A Dataset is attached to one or many entries to form a set of data.

- Parameters

dataset_id – The unique identifier for this dataset as a string. It should be a randomly generated UUID, similar to other nomad ids.

name – The human readable name of the dataset as string. The dataset name must be unique for the user.

user_id – The unique user_id of the owner and creator of this dataset. The owner must not change after creation.

doi – The optional Document Object Identifier (DOI) associated with this dataset. Nomad can register DOIs that link back to the respective representation of the dataset in the nomad UI. This quantity holds the string representation of this DOI. There is only one per dataset. The DOI is just the DOI name, not its full URL, e.g. “10.17172/nomad/2019.10.29-1”.

pid – The original NOMAD CoE Repository dataset PID. Old DOIs still reference datasets based on this id. Is not used for new datasets.

created – The date when the dataset was first created.

modified – The date when the dataset was last modified. An owned dataset can only be extended after a DOI was assigned. A foreign dataset cannot be changed once a DOI was assigned.

dataset_type – The type determined if a dataset is owned, i.e. was created by the uploader/owner of the contained entries; or if a dataset is foreign, i.e. it was created by someone not necessarily related to the entries.

-

m_def: Section = nomad.datamodel.datamodel.Dataset:Section¶

-

dataset_id¶ An optimized replacement for Quantity suitable for primitive properties.

-

name¶ An optimized replacement for Quantity suitable for primitive properties.

-

user_id¶ An optimized replacement for Quantity suitable for primitive properties.

-

doi¶ An optimized replacement for Quantity suitable for primitive properties.

-

pid¶ An optimized replacement for Quantity suitable for primitive properties.

-

created¶ To define quantities, instantiate

Quantityas a classattribute values in a section classes. The name of a quantity is automatically taken from its section class attribute. You can provide all other attributes to the constructor with keyword argumentsSee metainfo-sections to learn about section classes. In Python terms,

Quantityis a descriptor. Descriptors define how to get and set attributes in a Python object. This allows us to use sections like regular Python objects and quantity like regular Python attributes.Each quantity must define a basic data type and a shape. The values of a quantity must fulfil the given type. The default shape is a single value. Quantities can also have physical units. Units are applied to all values.

-

type¶ Defines the datatype of quantity values. This is the type of individual elements in a potentially complex shape. If you define a list of integers for example, the shape would be list and the type integer:

Quantity(type=int, shape=['0..*']).The type can be one of:

a build-in primitive Python type:

int,str,bool,floatan instance of

MEnum, e.g.MEnum('one', 'two', 'three')a section to define references to other sections as quantity values

a custom meta-info

DataType, see Environmentsa numpy dtype, e.g.

np.dtype('float32')typing.Anyto support any value

If set to dtype, this quantity will use a numpy array or scalar to store values internally. If a regular (nested) Python list or Python scalar is given, it will be automatically converted. The given dtype will be used in the numpy value.

To define a reference, either a section class or instance of

Sectioncan be given. See metainfo-sections for details. Instances of the given section constitute valid values for this type. Upon serialization, references section instance will represented with metainfo URLs. See Resources.For quantities with more than one dimension, only numpy arrays and dtypes are allowed.

-

shape¶ The shape of the quantity. It defines its dimensionality.

A shape is a list, where each item defines one dimension. Each dimension can be:

an integer that defines the exact size of the dimension, e.g.

[3]is the shape of a 3D spacial vectora string that specifies a possible range, e.g.

0..*,1..*,3..6the name of an int typed and shapeless quantity in the same section which values define the length of this dimension, e.g.

number_of_atomsdefines the length ofatom_positions

Range specifications define lower and upper bounds for the possible dimension length. The

*can be used to denote an arbitrarily high upper bound.Quantities with dimensionality (length of the shape) higher than 1, must be numpy arrays. Theire type must be a dtype.

-

is_scalar¶ Derived quantity that is True, iff this quantity has shape of length 0

-

unit¶ The physics unit for this quantity. It is optional.

Units are represented with the Pint Python package. Pint defines units and their algebra. You can either use pint units directly, e.g.

units.m / units.s. The metainfo provides a preconfigured pint unit registryureg. You can also provide the unit as pint parsable string, e.g.'meter / seconds'or'm/s'.

-

default¶ The default value for this quantity. The value must match type and shape.

Be careful with a default value like

[]as it will be the default value for all occurrences of this quantity.

Quantities are mapped to Python properties on all section objects that instantiate the Python class/section definition that has this quantity. This means quantity values can be read and set like normal Python attributes.

In some cases it might be desirable to have virtual and read only quantities that are not real quantities used for storing values, but rather define an interface to other quantities. Examples for this are synonyms and derived quantities.

-

derived¶ A Python callable that takes the containing section as input and outputs the value for this quantity. This quantity cannot be set directly, its value is only derived by the given callable. The callable is executed when this quantity is get. Derived quantities are always virtual.

-

cached¶ A bool indicating that derived values should be cached unless the underlying section has changed.

-

virtual¶ A boolean that determines if this quantity is virtual. Virtual quantities can be get/set like regular quantities, but their values are not (de-)serialized, hence never permanently stored.

-

-

modified¶ To define quantities, instantiate

Quantityas a classattribute values in a section classes. The name of a quantity is automatically taken from its section class attribute. You can provide all other attributes to the constructor with keyword argumentsSee metainfo-sections to learn about section classes. In Python terms,

Quantityis a descriptor. Descriptors define how to get and set attributes in a Python object. This allows us to use sections like regular Python objects and quantity like regular Python attributes.Each quantity must define a basic data type and a shape. The values of a quantity must fulfil the given type. The default shape is a single value. Quantities can also have physical units. Units are applied to all values.

-

type Defines the datatype of quantity values. This is the type of individual elements in a potentially complex shape. If you define a list of integers for example, the shape would be list and the type integer:

Quantity(type=int, shape=['0..*']).The type can be one of:

a build-in primitive Python type:

int,str,bool,floatan instance of

MEnum, e.g.MEnum('one', 'two', 'three')a section to define references to other sections as quantity values

a custom meta-info

DataType, see Environmentsa numpy dtype, e.g.

np.dtype('float32')typing.Anyto support any value

If set to dtype, this quantity will use a numpy array or scalar to store values internally. If a regular (nested) Python list or Python scalar is given, it will be automatically converted. The given dtype will be used in the numpy value.

To define a reference, either a section class or instance of

Sectioncan be given. See metainfo-sections for details. Instances of the given section constitute valid values for this type. Upon serialization, references section instance will represented with metainfo URLs. See Resources.For quantities with more than one dimension, only numpy arrays and dtypes are allowed.

-

shape The shape of the quantity. It defines its dimensionality.

A shape is a list, where each item defines one dimension. Each dimension can be:

an integer that defines the exact size of the dimension, e.g.

[3]is the shape of a 3D spacial vectora string that specifies a possible range, e.g.

0..*,1..*,3..6the name of an int typed and shapeless quantity in the same section which values define the length of this dimension, e.g.

number_of_atomsdefines the length ofatom_positions

Range specifications define lower and upper bounds for the possible dimension length. The

*can be used to denote an arbitrarily high upper bound.Quantities with dimensionality (length of the shape) higher than 1, must be numpy arrays. Theire type must be a dtype.

-

is_scalar Derived quantity that is True, iff this quantity has shape of length 0

-

unit The physics unit for this quantity. It is optional.

Units are represented with the Pint Python package. Pint defines units and their algebra. You can either use pint units directly, e.g.

units.m / units.s. The metainfo provides a preconfigured pint unit registryureg. You can also provide the unit as pint parsable string, e.g.'meter / seconds'or'm/s'.

-

default The default value for this quantity. The value must match type and shape.

Be careful with a default value like

[]as it will be the default value for all occurrences of this quantity.

Quantities are mapped to Python properties on all section objects that instantiate the Python class/section definition that has this quantity. This means quantity values can be read and set like normal Python attributes.

In some cases it might be desirable to have virtual and read only quantities that are not real quantities used for storing values, but rather define an interface to other quantities. Examples for this are synonyms and derived quantities.

-

derived A Python callable that takes the containing section as input and outputs the value for this quantity. This quantity cannot be set directly, its value is only derived by the given callable. The callable is executed when this quantity is get. Derived quantities are always virtual.

-

cached A bool indicating that derived values should be cached unless the underlying section has changed.

-

virtual A boolean that determines if this quantity is virtual. Virtual quantities can be get/set like regular quantities, but their values are not (de-)serialized, hence never permanently stored.

-

-

dataset_type¶ To define quantities, instantiate

Quantityas a classattribute values in a section classes. The name of a quantity is automatically taken from its section class attribute. You can provide all other attributes to the constructor with keyword argumentsSee metainfo-sections to learn about section classes. In Python terms,

Quantityis a descriptor. Descriptors define how to get and set attributes in a Python object. This allows us to use sections like regular Python objects and quantity like regular Python attributes.Each quantity must define a basic data type and a shape. The values of a quantity must fulfil the given type. The default shape is a single value. Quantities can also have physical units. Units are applied to all values.

-

type Defines the datatype of quantity values. This is the type of individual elements in a potentially complex shape. If you define a list of integers for example, the shape would be list and the type integer:

Quantity(type=int, shape=['0..*']).The type can be one of:

a build-in primitive Python type:

int,str,bool,floatan instance of

MEnum, e.g.MEnum('one', 'two', 'three')a section to define references to other sections as quantity values

a custom meta-info

DataType, see Environmentsa numpy dtype, e.g.

np.dtype('float32')typing.Anyto support any value

If set to dtype, this quantity will use a numpy array or scalar to store values internally. If a regular (nested) Python list or Python scalar is given, it will be automatically converted. The given dtype will be used in the numpy value.

To define a reference, either a section class or instance of

Sectioncan be given. See metainfo-sections for details. Instances of the given section constitute valid values for this type. Upon serialization, references section instance will represented with metainfo URLs. See Resources.For quantities with more than one dimension, only numpy arrays and dtypes are allowed.

-

shape The shape of the quantity. It defines its dimensionality.

A shape is a list, where each item defines one dimension. Each dimension can be:

an integer that defines the exact size of the dimension, e.g.

[3]is the shape of a 3D spacial vectora string that specifies a possible range, e.g.

0..*,1..*,3..6the name of an int typed and shapeless quantity in the same section which values define the length of this dimension, e.g.

number_of_atomsdefines the length ofatom_positions

Range specifications define lower and upper bounds for the possible dimension length. The

*can be used to denote an arbitrarily high upper bound.Quantities with dimensionality (length of the shape) higher than 1, must be numpy arrays. Theire type must be a dtype.

-

is_scalar Derived quantity that is True, iff this quantity has shape of length 0

-

unit The physics unit for this quantity. It is optional.

Units are represented with the Pint Python package. Pint defines units and their algebra. You can either use pint units directly, e.g.

units.m / units.s. The metainfo provides a preconfigured pint unit registryureg. You can also provide the unit as pint parsable string, e.g.'meter / seconds'or'm/s'.

-

default The default value for this quantity. The value must match type and shape.

Be careful with a default value like

[]as it will be the default value for all occurrences of this quantity.

Quantities are mapped to Python properties on all section objects that instantiate the Python class/section definition that has this quantity. This means quantity values can be read and set like normal Python attributes.

In some cases it might be desirable to have virtual and read only quantities that are not real quantities used for storing values, but rather define an interface to other quantities. Examples for this are synonyms and derived quantities.

-

derived A Python callable that takes the containing section as input and outputs the value for this quantity. This quantity cannot be set directly, its value is only derived by the given callable. The callable is executed when this quantity is get. Derived quantities are always virtual.

-

cached A bool indicating that derived values should be cached unless the underlying section has changed.

-

virtual A boolean that determines if this quantity is virtual. Virtual quantities can be get/set like regular quantities, but their values are not (de-)serialized, hence never permanently stored.

-

-

query¶ To define quantities, instantiate

Quantityas a classattribute values in a section classes. The name of a quantity is automatically taken from its section class attribute. You can provide all other attributes to the constructor with keyword argumentsSee metainfo-sections to learn about section classes. In Python terms,

Quantityis a descriptor. Descriptors define how to get and set attributes in a Python object. This allows us to use sections like regular Python objects and quantity like regular Python attributes.Each quantity must define a basic data type and a shape. The values of a quantity must fulfil the given type. The default shape is a single value. Quantities can also have physical units. Units are applied to all values.

-

type Defines the datatype of quantity values. This is the type of individual elements in a potentially complex shape. If you define a list of integers for example, the shape would be list and the type integer:

Quantity(type=int, shape=['0..*']).The type can be one of:

a build-in primitive Python type:

int,str,bool,floatan instance of

MEnum, e.g.MEnum('one', 'two', 'three')a section to define references to other sections as quantity values

a custom meta-info

DataType, see Environmentsa numpy dtype, e.g.

np.dtype('float32')typing.Anyto support any value

If set to dtype, this quantity will use a numpy array or scalar to store values internally. If a regular (nested) Python list or Python scalar is given, it will be automatically converted. The given dtype will be used in the numpy value.

To define a reference, either a section class or instance of

Sectioncan be given. See metainfo-sections for details. Instances of the given section constitute valid values for this type. Upon serialization, references section instance will represented with metainfo URLs. See Resources.For quantities with more than one dimension, only numpy arrays and dtypes are allowed.

-

shape The shape of the quantity. It defines its dimensionality.

A shape is a list, where each item defines one dimension. Each dimension can be:

an integer that defines the exact size of the dimension, e.g.

[3]is the shape of a 3D spacial vectora string that specifies a possible range, e.g.

0..*,1..*,3..6the name of an int typed and shapeless quantity in the same section which values define the length of this dimension, e.g.

number_of_atomsdefines the length ofatom_positions

Range specifications define lower and upper bounds for the possible dimension length. The

*can be used to denote an arbitrarily high upper bound.Quantities with dimensionality (length of the shape) higher than 1, must be numpy arrays. Theire type must be a dtype.

-

is_scalar Derived quantity that is True, iff this quantity has shape of length 0

-

unit The physics unit for this quantity. It is optional.

Units are represented with the Pint Python package. Pint defines units and their algebra. You can either use pint units directly, e.g.

units.m / units.s. The metainfo provides a preconfigured pint unit registryureg. You can also provide the unit as pint parsable string, e.g.'meter / seconds'or'm/s'.

-

default The default value for this quantity. The value must match type and shape.

Be careful with a default value like

[]as it will be the default value for all occurrences of this quantity.

Quantities are mapped to Python properties on all section objects that instantiate the Python class/section definition that has this quantity. This means quantity values can be read and set like normal Python attributes.

In some cases it might be desirable to have virtual and read only quantities that are not real quantities used for storing values, but rather define an interface to other quantities. Examples for this are synonyms and derived quantities.

-

derived A Python callable that takes the containing section as input and outputs the value for this quantity. This quantity cannot be set directly, its value is only derived by the given callable. The callable is executed when this quantity is get. Derived quantities are always virtual.

-

cached A bool indicating that derived values should be cached unless the underlying section has changed.

-

virtual A boolean that determines if this quantity is virtual. Virtual quantities can be get/set like regular quantities, but their values are not (de-)serialized, hence never permanently stored.

-

-

entries¶ An optimized replacement for Quantity suitable for primitive properties.

The class MongoMetadata is used to tag metadata stored in mongodb.

-

class

nomad.datamodel.MongoMetadata¶ NOMAD entry quantities that are stored in mongodb and not necessarely in the archive.

-

m_def: Category = nomad.datamodel.datamodel.MongoMetadata:Category¶

-

The class EntryMetadata is used to represent all metadata about an entry.

-

class

nomad.datamodel.EntryMetadata(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

upload_id¶ The

upload_idof the calculations upload (random UUID).

-

calc_id¶ The unique mainfile based calculation id.

-

calc_hash¶ The raw file content based checksum/hash of this calculation.

-

pid¶ The unique persistent id of this calculation.

-

mainfile¶ The upload relative mainfile path.

-

domain¶ Must be the key for a registered domain. This determines which actual subclass is instantiated.

-

files¶ A list of all files, relative to upload.

-

upload_time¶ The time when the calc was uploaded.

-

uploader¶ An object describing the uploading user, has at least

user_id

-

processed¶ Boolean indicating if this calc was successfully processed and archive data and calc metadata is available.

-

last_processing¶ A datatime with the time of the last successful processing.

-

nomad_version¶ A string that describes the version of the nomad software that was used to do the last successful processing.

-

comment¶ An arbitrary string with user provided information about the entry.

-

references¶ A list of URLs for resources that are related to the entry.

-

uploader Id of the uploader of this entry.

Ids of all co-authors (excl. the uploader) of this entry. Co-authors are shown as authors of this entry alongside its uploader.

Ids of all users that this entry is shared with. These users can find, see, and download all data for this entry, even if it is in staging or has an embargo.

-

with_embargo¶ Entries with embargo are only visible to the uploader, the admin user, and users the entry is shared with (see shared_with).

-

upload_time The time that this entry was uploaded

-

datasets¶ Ids of all datasets that this entry appears in

-

m_def: Section = nomad.datamodel.datamodel.EntryMetadata:Section¶

-

upload_id The persistent and globally unique identifier for the upload of the entry

-

calc_id A persistent and globally unique identifier for the entry

-

calc_hash A raw file content based checksum/hash

-

mainfile The path to the mainfile from the root directory of the uploaded files

-

files The paths to the files within the upload that belong to this entry. All files within the same directory as the entry’s mainfile are considered the auxiliary files that belong to the entry.

-

pid The unique, sequentially enumerated, integer PID that was used in the legacy NOMAD CoE. It allows to resolve URLs of the old NOMAD CoE Repository.

-

raw_id¶ The code specific identifier extracted from the entrie’s raw files if such an identifier is supported by the underlying code

-

domain The material science domain

-

published¶ Indicates if the entry is published

-

processed Indicates that the entry is successfully processed.

-

last_processing The datetime of the last processing

-

processing_errors¶ Errors that occured during processing

-

nomad_version The NOMAD version used for the last processing

-

nomad_commit¶ The NOMAD commit used for the last processing

-

parser_name¶ The NOMAD parser used for the last processing

-

comment A user provided comment for this entry

-

references User provided references (URLs) for this entry

-

external_db¶ The repository or external database where the original data resides

-

uploader The uploader of the entry

-

origin¶ A short human readable description of the entries origin. Usually it is the handle of an external database/repository or the name of the uploader.

-

coauthors A user provided list of co-authors

All authors (uploader and co-authors)

-

shared_with A user provided list of userts to share the entry with

-

owners¶ All owner (uploader and shared with users)

-

license¶ A short license description (e.g. CC BY 4.0), that refers to the license of this entry.

-

with_embargo Indicated if this entry is under an embargo

-

upload_time The date and time this entry was uploaded to nomad

-

upload_name¶ The user provided upload name

-

datasets A list of user curated datasets this entry belongs to.

-

external_id¶ A user provided external id. Usually the id for an entry in an external database where the data was imported from.

-

last_edit¶ The date and time the user metadata was edited last

-

formula¶ A (reduced) chemical formula

-

atoms¶ The atom labels of all atoms of the entry’s material

-

only_atoms¶ The atom labels concatenated in order-number order

-

n_atoms¶ The number of atoms in the entry’s material

-

ems¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section¶ A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats¶ A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

dft¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

qcms¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

encyclopedia¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

apply_user_metadata(metadata: dict)¶ Applies a user provided metadata dict to this calc.

-

apply_domain_metadata(archive)¶ Used to apply metadata that is related to the domain.

-

Domains¶

The datamodel supports different domains. This means that most domain metadata of an

entry/calculation is stored in domain-specific sub sections of the EntryMetadata

section. We currently have the following domain specific metadata classes/sections:

-

class

nomad.datamodel.dft.DFTMetadata(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.dft.DFTMetadata:Section¶

-

basis_set¶ The used basis set functions.

-

xc_functional¶ The libXC based xc functional classification used in the simulation.

-

xc_functional_names¶ The list of libXC functional names that where used in this entry.

-

system¶ The system type of the simulated system.

-

compound_type¶ The compound type of the simulated system.

-

crystal_system¶ The crystal system type of the simulated system.

-

spacegroup¶ The spacegroup of the simulated system as number.

-

spacegroup_symbol¶ The spacegroup as international short symbol.

-

code_name¶ The name of the used code.

-

code_version¶ The version of the used code.

-

n_geometries¶ Number of unique geometries.

-

n_calculations¶ Number of single configuration calculation sections

-

n_total_energies¶ Number of total energy calculations

-

n_quantities¶ Number of metainfo quantities parsed from the entry.

-

quantities¶ All quantities that are used by this entry.

-

searchable_quantities¶ All quantities with existence filters in the search GUI.

-

geometries¶ Hashes for each simulated geometry

-

group_hash¶ Hashes that describe unique geometries simulated by this code run.

-

labels¶ The labels taken from AFLOW prototypes and springer.

-

labels_springer_compound_class¶ Springer compund classification.

-

labels_springer_classification¶ Springer classification by property.

-

optimade¶ Metadata used for the optimade API.

-

workflow¶ To define quantities, instantiate

Quantityas a classattribute values in a section classes. The name of a quantity is automatically taken from its section class attribute. You can provide all other attributes to the constructor with keyword argumentsSee metainfo-sections to learn about section classes. In Python terms,

Quantityis a descriptor. Descriptors define how to get and set attributes in a Python object. This allows us to use sections like regular Python objects and quantity like regular Python attributes.Each quantity must define a basic data type and a shape. The values of a quantity must fulfil the given type. The default shape is a single value. Quantities can also have physical units. Units are applied to all values.

-

type¶ Defines the datatype of quantity values. This is the type of individual elements in a potentially complex shape. If you define a list of integers for example, the shape would be list and the type integer:

Quantity(type=int, shape=['0..*']).The type can be one of:

a build-in primitive Python type:

int,str,bool,floatan instance of

MEnum, e.g.MEnum('one', 'two', 'three')a section to define references to other sections as quantity values

a custom meta-info

DataType, see Environmentsa numpy dtype, e.g.

np.dtype('float32')typing.Anyto support any value

If set to dtype, this quantity will use a numpy array or scalar to store values internally. If a regular (nested) Python list or Python scalar is given, it will be automatically converted. The given dtype will be used in the numpy value.

To define a reference, either a section class or instance of

Sectioncan be given. See metainfo-sections for details. Instances of the given section constitute valid values for this type. Upon serialization, references section instance will represented with metainfo URLs. See Resources.For quantities with more than one dimension, only numpy arrays and dtypes are allowed.

-

shape¶ The shape of the quantity. It defines its dimensionality.

A shape is a list, where each item defines one dimension. Each dimension can be:

an integer that defines the exact size of the dimension, e.g.

[3]is the shape of a 3D spacial vectora string that specifies a possible range, e.g.

0..*,1..*,3..6the name of an int typed and shapeless quantity in the same section which values define the length of this dimension, e.g.

number_of_atomsdefines the length ofatom_positions

Range specifications define lower and upper bounds for the possible dimension length. The

*can be used to denote an arbitrarily high upper bound.Quantities with dimensionality (length of the shape) higher than 1, must be numpy arrays. Theire type must be a dtype.

-

is_scalar¶ Derived quantity that is True, iff this quantity has shape of length 0

-

unit¶ The physics unit for this quantity. It is optional.

Units are represented with the Pint Python package. Pint defines units and their algebra. You can either use pint units directly, e.g.

units.m / units.s. The metainfo provides a preconfigured pint unit registryureg. You can also provide the unit as pint parsable string, e.g.'meter / seconds'or'm/s'.

-

default¶ The default value for this quantity. The value must match type and shape.

Be careful with a default value like

[]as it will be the default value for all occurrences of this quantity.

Quantities are mapped to Python properties on all section objects that instantiate the Python class/section definition that has this quantity. This means quantity values can be read and set like normal Python attributes.

In some cases it might be desirable to have virtual and read only quantities that are not real quantities used for storing values, but rather define an interface to other quantities. Examples for this are synonyms and derived quantities.

-

derived¶ A Python callable that takes the containing section as input and outputs the value for this quantity. This quantity cannot be set directly, its value is only derived by the given callable. The callable is executed when this quantity is get. Derived quantities are always virtual.

-

cached¶ A bool indicating that derived values should be cached unless the underlying section has changed.

-

virtual¶ A boolean that determines if this quantity is virtual. Virtual quantities can be get/set like regular quantities, but their values are not (de-)serialized, hence never permanently stored.

-

-

code_name_from_parser()¶

-

update_group_hash()¶

-

apply_domain_metadata(entry_archive)¶

-

band_structure_electronic(entry_archive)¶ - Returns whether a valid electronic band structure can be found. In

the case of multiple valid band structures, only the latest one is considered.

- Band structure is reported only under the following conditions:

There is a non-empty array of band_k_points.

There is a non-empty array of band_energies.

The reported band_structure_kind is not “vibrational”.

-

dos_electronic(entry_archive)¶ - Returns whether a valid electronic DOS can be found. In the case of

multiple valid DOSes, only the latest one is reported.

- DOS is reported only under the following conditions:

There is a non-empty array of dos_values_normalized.

There is a non-empty array of dos_energies.

The reported dos_kind is not “vibrational”.

-

band_structure_phonon(entry_archive)¶ - Returns whether a valid phonon band structure can be found. In the

case of multiple valid band structures, only the latest one is considered.

- Band structure is reported only under the following conditions:

There is a non-empty array of band_k_points.

There is a non-empty array of band_energies.

The reported band_structure_kind is “vibrational”.

-

dos_phonon(entry_archive)¶ - Returns whether a valid phonon dos can be found. In the case of

multiple valid data sources, only the latest one is reported.

- DOS is reported only under the following conditions:

There is a non-empty array of dos_values_normalized.

There is a non-empty array of dos_energies.

The reported dos_kind is “vibrational”.

-

traverse_reversed(entry_archive, path)¶ Traverses the given metainfo path in reverse order. Useful in finding the latest reported section or value.

-

-

class

nomad.datamodel.ems.EMSMetadata(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.ems.EMSMetadata:Section¶

-

chemical¶ An optimized replacement for Quantity suitable for primitive properties.

-

sample_constituents¶ An optimized replacement for Quantity suitable for primitive properties.

-

sample_microstructure¶ An optimized replacement for Quantity suitable for primitive properties.

-

experiment_summary¶ An optimized replacement for Quantity suitable for primitive properties.

-

origin_time¶ To define quantities, instantiate

Quantityas a classattribute values in a section classes. The name of a quantity is automatically taken from its section class attribute. You can provide all other attributes to the constructor with keyword argumentsSee metainfo-sections to learn about section classes. In Python terms,

Quantityis a descriptor. Descriptors define how to get and set attributes in a Python object. This allows us to use sections like regular Python objects and quantity like regular Python attributes.Each quantity must define a basic data type and a shape. The values of a quantity must fulfil the given type. The default shape is a single value. Quantities can also have physical units. Units are applied to all values.

-

type¶ Defines the datatype of quantity values. This is the type of individual elements in a potentially complex shape. If you define a list of integers for example, the shape would be list and the type integer:

Quantity(type=int, shape=['0..*']).The type can be one of:

a build-in primitive Python type:

int,str,bool,floatan instance of

MEnum, e.g.MEnum('one', 'two', 'three')a section to define references to other sections as quantity values

a custom meta-info

DataType, see Environmentsa numpy dtype, e.g.

np.dtype('float32')typing.Anyto support any value

If set to dtype, this quantity will use a numpy array or scalar to store values internally. If a regular (nested) Python list or Python scalar is given, it will be automatically converted. The given dtype will be used in the numpy value.

To define a reference, either a section class or instance of

Sectioncan be given. See metainfo-sections for details. Instances of the given section constitute valid values for this type. Upon serialization, references section instance will represented with metainfo URLs. See Resources.For quantities with more than one dimension, only numpy arrays and dtypes are allowed.

-

shape¶ The shape of the quantity. It defines its dimensionality.

A shape is a list, where each item defines one dimension. Each dimension can be:

an integer that defines the exact size of the dimension, e.g.

[3]is the shape of a 3D spacial vectora string that specifies a possible range, e.g.

0..*,1..*,3..6the name of an int typed and shapeless quantity in the same section which values define the length of this dimension, e.g.

number_of_atomsdefines the length ofatom_positions

Range specifications define lower and upper bounds for the possible dimension length. The

*can be used to denote an arbitrarily high upper bound.Quantities with dimensionality (length of the shape) higher than 1, must be numpy arrays. Theire type must be a dtype.

-

is_scalar¶ Derived quantity that is True, iff this quantity has shape of length 0

-

unit¶ The physics unit for this quantity. It is optional.

Units are represented with the Pint Python package. Pint defines units and their algebra. You can either use pint units directly, e.g.

units.m / units.s. The metainfo provides a preconfigured pint unit registryureg. You can also provide the unit as pint parsable string, e.g.'meter / seconds'or'm/s'.

-

default¶ The default value for this quantity. The value must match type and shape.

Be careful with a default value like

[]as it will be the default value for all occurrences of this quantity.

Quantities are mapped to Python properties on all section objects that instantiate the Python class/section definition that has this quantity. This means quantity values can be read and set like normal Python attributes.

In some cases it might be desirable to have virtual and read only quantities that are not real quantities used for storing values, but rather define an interface to other quantities. Examples for this are synonyms and derived quantities.

-

derived¶ A Python callable that takes the containing section as input and outputs the value for this quantity. This quantity cannot be set directly, its value is only derived by the given callable. The callable is executed when this quantity is get. Derived quantities are always virtual.

-

cached¶ A bool indicating that derived values should be cached unless the underlying section has changed.

-

virtual¶ A boolean that determines if this quantity is virtual. Virtual quantities can be get/set like regular quantities, but their values are not (de-)serialized, hence never permanently stored.

-

-

experiment_location¶ An optimized replacement for Quantity suitable for primitive properties.

-

method¶ An optimized replacement for Quantity suitable for primitive properties.

-

data_type¶ An optimized replacement for Quantity suitable for primitive properties.

-

probing_method¶ An optimized replacement for Quantity suitable for primitive properties.

-

repository_name¶ An optimized replacement for Quantity suitable for primitive properties.

-

repository_url¶ An optimized replacement for Quantity suitable for primitive properties.

-

entry_repository_url¶ An optimized replacement for Quantity suitable for primitive properties.

-

preview_url¶ An optimized replacement for Quantity suitable for primitive properties.

-

quantities¶ An optimized replacement for Quantity suitable for primitive properties.

-

group_hash¶ An optimized replacement for Quantity suitable for primitive properties.

-

apply_domain_metadata(entry_archive)¶

-

-

class

nomad.datamodel.OptimadeEntry(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.optimade.OptimadeEntry:Section¶

-

elements¶ Names of the different elements present in the structure.

-

nelements¶ Number of different elements in the structure as an integer.

-

elements_ratios¶ Relative proportions of different elements in the structure.

-

chemical_formula_descriptive¶ The chemical formula for a structure as a string in a form chosen by the API implementation.

-

chemical_formula_reduced¶ The reduced chemical formula for a structure as a string with element symbols and integer chemical proportion numbers. The proportion number MUST be omitted if it is 1.

-

chemical_formula_hill¶ The chemical formula for a structure in Hill form with element symbols followed by integer chemical proportion numbers. The proportion number MUST be omitted if it is 1.

-

chemical_formula_anonymous¶ The anonymous formula is the chemical_formula_reduced, but where the elements are instead first ordered by their chemical proportion number, and then, in order left to right, replaced by anonymous symbols A, B, C, …, Z, Aa, Ba, …, Za, Ab, Bb, … and so on.

-

dimension_types¶ List of three integers. For each of the three directions indicated by the three lattice vectors (see property lattice_vectors). This list indicates if the direction is periodic (value 1) or non-periodic (value 0). Note: the elements in this list each refer to the direction of the corresponding entry in lattice_vectors and not the Cartesian x, y, z directions.

-

nperiodic_dimensions¶ An integer specifying the number of periodic dimensions in the structure, equivalent to the number of non-zero entries in dimension_types.

-

lattice_vectors¶ The three lattice vectors in Cartesian coordinates, in ångström (Å).

-

cartesian_site_positions¶ Cartesian positions of each site. A site is an atom, a site potentially occupied by an atom, or a placeholder for a virtual mixture of atoms (e.g., in a virtual crystal approximation).

-

nsites¶ An integer specifying the length of the cartesian_site_positions property.

-

species_at_sites¶ Name of the species at each site (where values for sites are specified with the same order of the cartesian_site_positions property). The properties of the species are found in the species property.

-

structure_features¶ A list of strings that flag which special features are used by the structure.

disorder: This flag MUST be present if any one entry in the species list has a

chemical_symbols list that is longer than 1 element. - unknown_positions: This flag MUST be present if at least one component of the cartesian_site_positions list of lists has value null. - assemblies: This flag MUST be present if the assemblies list is present.

-

species¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section¶ A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats¶ A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

-

class

nomad.datamodel.encyclopedia.WyckoffVariables(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.WyckoffVariables:Section¶

-

x¶ The x variable if present.

-

y¶ The y variable if present.

-

z¶ The z variable if present.

-

-

class

nomad.datamodel.encyclopedia.WyckoffSet(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.WyckoffSet:Section¶

-

wyckoff_letter¶ The Wyckoff letter for this set.

-

indices¶ Indices of the atoms belonging to this group.

-

element¶ Chemical element at this Wyckoff position.

-

variables¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section¶ A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats¶ A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

-

class

nomad.datamodel.encyclopedia.LatticeParameters(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.LatticeParameters:Section¶

-

a¶ Length of the first basis vector.

-

b¶ Length of the second basis vector.

-

c¶ Length of the third basis vector.

-

alpha¶ Angle between second and third basis vector.

-

beta¶ Angle between first and third basis vector.

-

gamma¶ Angle between first and second basis vector.

-

-

class

nomad.datamodel.encyclopedia.IdealizedStructure(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.IdealizedStructure:Section¶

-

atom_labels¶ Type (element, species) of each atom.

-

atom_positions¶ Atom positions given in coordinates that are relative to the idealized cell.

-

lattice_vectors¶ Lattice vectors of the idealized structure. For bulk materials it is the Bravais cell. This cell is representative and is idealized to match the detected symmetry properties.

-

lattice_vectors_primitive¶ Lattice vectors of the the primitive unit cell in a form to be visualized within the idealized cell. This cell is representative and is idealized to match the detected symmemtry properties.

-

periodicity¶ Automatically detected true periodicity of each lattice direction. May not correspond to the periodicity used in the calculation.

-

number_of_atoms¶ Number of atoms in the idealized structure.”

-

cell_volume¶ Volume of the idealized cell. The cell volume can only be reported consistently after idealization and may not perfectly correspond to the original simulation cell.

-

wyckoff_sets¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section¶ A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats¶ A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

lattice_parameters¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

-

class

nomad.datamodel.encyclopedia.Bulk(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.Bulk:Section¶

-

bravais_lattice¶ The Bravais lattice type in the Pearson notation, where the first lowercase letter indicates the crystal system, and the second uppercase letter indicates the lattice type. The value can only be one of the 14 different Bravais lattices in three dimensions.

Crystal system letters:

a = Triclinic m = Monoclinic o = Orthorhombic t = Tetragonal h = Hexagonal and Trigonal c = Cubic

Lattice type letters:

P = Primitive S (A, B, C) = One side/face centred I = Body centered R = Rhombohedral centring F = All faces centred

-

crystal_system¶ The detected crystal system. One of seven possibilities in three dimensions.

-

has_free_wyckoff_parameters¶ Whether the material has any Wyckoff sites with free parameters. If a materials has free Wyckoff parameters, at least some of the atoms are not bound to a particular location in the structure but are allowed to move with possible restrictions set by the symmetry.

-

point_group¶ Point group in Hermann-Mauguin notation, part of crystal structure classification. There are 32 point groups in three dimensional space.

-

space_group_number¶ Integer representation of the space group, part of crystal structure classification, part of material definition.

-

space_group_international_short_symbol¶ International short symbol notation of the space group.

-

structure_prototype¶ The prototypical material for this crystal structure.

-

structure_type¶ Classification according to known structure type, considering the point group of the crystal and the occupations with different atom types.

-

strukturbericht_designation¶ Classification of the material according to the historically grown “strukturbericht”.

-

-

class

nomad.datamodel.encyclopedia.Material(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.Material:Section¶

-

material_type¶ “Broad structural classification for the material, e.g. bulk, 2D, 1D… “,

-

material_id¶ A fixed length, unique material identifier in the form of a hash digest.

-

material_name¶ Most meaningful name for a material if one could be assigned

-

material_classification¶ Contains the compound class and classification of the material according to springer materials in JSON format.

-

formula¶ Formula giving the composition and occurrences of the elements in the Hill notation. For periodic materials the formula is calculated fom the primitive unit cell.

-

formula_reduced¶ Formula giving the composition and occurrences of the elements in the Hill notation where the number of occurences have been divided by the greatest common divisor.

-

species_and_counts¶ The formula separated into individual terms containing both the atom type and count. Used for searching parts of a formula.

-

species¶ The formula separated into individual terms containing only unique atom species. Used for searching materials containing specific elements.

-

bulk¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section¶ A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats¶ A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

idealized_structure¶ Like quantities, sub-sections are defined in a section class as attributes of this class. An like quantities, each sub-section definition becomes a property of the corresponding section definition (parent). A sub-section definition references another section definition as the sub-section (child). As a consequence, parent section instances can contain child section instances as sub-sections.

Contrary to the old NOMAD metainfo, we distinguish between sub-section the section and sub-section the property. This allows to use on child section definition as sub-section of many different parent section definitions.

-

sub_section A

Sectionor Python class object for a section class. This will be the child section definition. The defining section the child section definition.

-

repeats A boolean that determines wether this sub-section can appear multiple times in the parent section.

-

-

-

class

nomad.datamodel.encyclopedia.Method(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.Method:Section¶

-

method_type¶ Generic name for the used methodology.

-

core_electron_treatment¶ How the core electrons are described.

-

functional_long_name¶ Full identified for the used exchange-correlation functional.

-

functional_type¶ Basic type of the used exchange-correlation functional.

-

method_id¶ A fixed length, unique method identifier in the form of a hash digest. The hash is created by using several method settings as seed. This hash is only defined if a set of well-defined method settings is available for the used program.

-

group_eos_id¶ A fixed length, unique identifier for equation-of-state calculations. Only calculations within the same upload and with a method hash available will be grouped under the same hash.

-

group_parametervariation_id¶ A fixed length, unique identifier for calculations where structure is identical but the used computational parameters are varied. Only calculations within the same upload and with a method hash available will be grouped under the same hash.

-

gw_starting_point¶ The exchange-correlation functional that was used as a starting point for this GW calculation.

-

gw_type¶ Basic type of GW calculation.

-

smearing_kind¶ Smearing function used for the electronic structure calculation.

-

smearing_parameter¶ Parameter for smearing, usually the width.

-

-

class

nomad.datamodel.encyclopedia.Calculation(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.Calculation:Section¶

-

calculation_type¶ Defines the type of calculation that was detected for this entry.

-

-

class

nomad.datamodel.encyclopedia.Energies(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.Energies:Section¶

-

energy_total¶ Total energy.

-

energy_total_T0¶ Total energy projected to T=0.

-

energy_free¶ Free energy.

-

-

class

nomad.datamodel.encyclopedia.Properties(m_def: Optional[nomad.metainfo.metainfo.Section] = None, m_resource: nomad.metainfo.metainfo.MResource = None, **kwargs)¶ -

m_def: Section = nomad.datamodel.encyclopedia.Properties:Section¶

-

atomic_density¶ Atomic density of the material (atoms/volume).”

-

mass_density¶ Mass density of the material.

-